The Limitation of Intelligence

When it comes to AI, everything boils down to intention and context. It doesn’t matter how advanced large language models (LLMs) become or how much data we feed them—without properly orchestrating intent and handling context, they’re simply tools with untapped potential.

This realization struck me early in my journey with LLMs. Back then, I was exploring what many now call "prompt engineering." But engineering feels like the wrong term—it’s like calling someone a “story engineer” or “relationship engineer.” What we’re really talking about is communication.

In the beginning, I immersed myself in understanding these LLMs by using them constantly. Through this, I learned that unlocking their potential always comes back to two foundations: mastering intention and context.

Context

What separates human ability from AI? It’s not intelligence—it’s context. Humans draw from a vast and deeply personal reservoir of experiences: the sights we’ve seen, the feelings we’ve felt, the stories we’ve lived. These subjective experiences create a context far richer than anything an AI can access, even with all the data on the internet.

Consider this: a book or movie resonates not because it perfectly replicates life, but because it sparks something in the reader or viewer—memories, emotions, imagination. It’s this shared experience, the context window, that AI has yet to fully grasp.

That is why it is so important to give an LLM as much relevant context as possible in every interaction and execution. I’ve also found that when you are clear enough about the context, you rarely need to think much about intent, since it usually follows logically from the context.
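
To make this concrete, here is a minimal sketch of a prompt where the context is laid out explicitly and the request itself stays short. The `Context` fields, the `build_messages` function and the section labels are hypothetical; the role/content message shape is the chat format used by several LLM APIs, and no model is actually called here.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Hypothetical container for the context handed to an LLM."""
    who: str                                              # who the user is
    situation: str                                        # what is going on right now
    facts: list[str] = field(default_factory=list)        # relevant background facts
    constraints: list[str] = field(default_factory=list)  # rules the answer must respect


def build_messages(ctx: Context, request: str) -> list[dict[str, str]]:
    """Render the context into a system message and keep the user request short."""
    sections = [f"User: {ctx.who}", f"Situation: {ctx.situation}"]
    if ctx.facts:
        sections.append("Relevant facts:\n" + "\n".join(f"- {f}" for f in ctx.facts))
    if ctx.constraints:
        sections.append("Constraints:\n" + "\n".join(f"- {c}" for c in ctx.constraints))
    return [
        {"role": "system", "content": "\n\n".join(sections)},
        # With the context spelled out, the request does not need to restate intent.
        {"role": "user", "content": request},
    ]


ctx = Context(
    who="Backend developer on a small team",
    situation="Preparing a release; CI fails on one flaky test",
    facts=["Test suite uses pytest", "The flaky test hits a real network endpoint"],
    constraints=["Answer in under 150 words"],
)
messages = build_messages(ctx, "What should I do?")
```

The user message is just "What should I do?"; what "do" means here is carried entirely by the system message.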

Training Beyond Words on the Internet

There’s a belief that we’re running out of data to train models on. I think that’s shortsighted. The issue isn’t the quantity of data but its nature. Most AI training relies on language—a human construct filled with nuance, ambiguity, and limitations.

What if AI could learn from the living world? Imagine training a model not just on text but on the logic of nature: the growth of a seed, the buzzing dance of bees, or the evolution of ecosystems. By stepping beyond human language and into the processes of life itself, we could unlock unimaginable potential.

AI is already breathtakingly capable. With minimal input, it can mimic human expression to an extraordinary degree. But what happens when it gains access to more than our “high-level communication protocols”? What happens when it taps into the raw process?

With Triform, we are currently working on models that help build AI agents, as well as on converting code between languages, including pseudo-code. We believe this can be done by letting the models train by doing and through self-evaluation. Of course, these pipelines have to be very well structured, combining LLMs, smaller models, and execution-based evaluations that assess both the process and the result of the execution. The outcomes are then used as training data. This kind of training is, of course, resource intensive, but it does not depend on existing datasets: the execution creates the datasets.
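
A minimal sketch of the idea that execution creates the dataset: candidate programs are run against a check, and every attempt is stored together with its outcome so it can later serve as training data. This is not Triform’s pipeline. `generate_candidates` is a hypothetical stand-in for an LLM or smaller model, and the record format is an assumption.

```python
import json
import subprocess
import sys
import tempfile
from pathlib import Path


def generate_candidates(task: str) -> list[str]:
    """Hypothetical stand-in for a model proposing code for a task."""
    return [
        "def add(a, b):\n    return a - b\n",  # wrong candidate
        "def add(a, b):\n    return a + b\n",  # correct candidate
    ]


def run_candidate(code: str, check: str, timeout: float = 5.0) -> tuple[bool, str]:
    """Execute candidate plus check in a separate Python process.

    Caution: a subprocess is not a sandbox; untrusted generated code needs real isolation.
    """
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        script.write_text(code + "\n" + check + "\n")
        try:
            proc = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True, text=True, timeout=timeout,
            )
        except subprocess.TimeoutExpired:
            return False, "timeout"
        return proc.returncode == 0, proc.stdout + proc.stderr


def build_dataset(task: str, check: str) -> list[dict]:
    """Generate, execute, evaluate; keep each attempt with its outcome as a record."""
    records = []
    for code in generate_candidates(task):
        passed, output = run_candidate(code, check)
        records.append({"task": task, "code": code, "passed": passed, "output": output})
    return records


if __name__ == "__main__":
    data = build_dataset("Write add(a, b)", "assert add(2, 3) == 5")
    # Passing attempts can serve as positive examples, failing ones as negatives.
    print(json.dumps([r["passed"] for r in data]))  # → [false, true]
```

No dataset goes in; the records come out of running the code, which is the property the paragraph above describes.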

The Power of Intention and Context

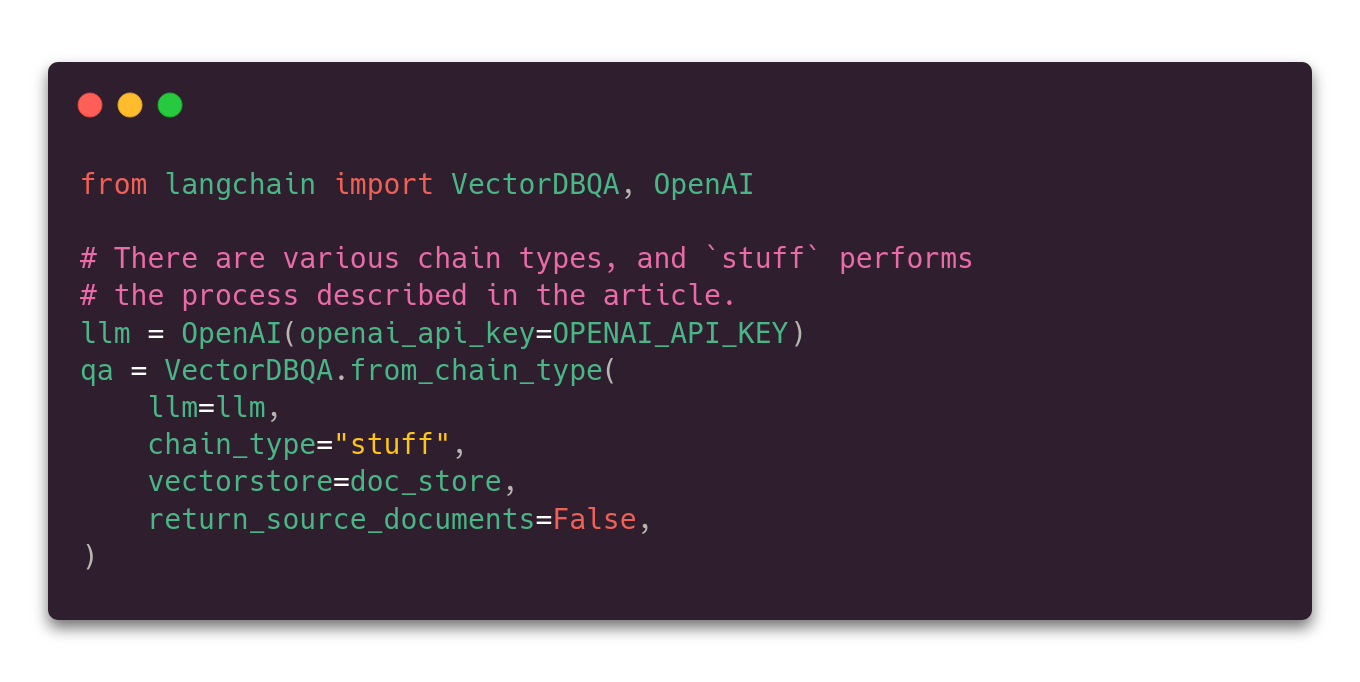

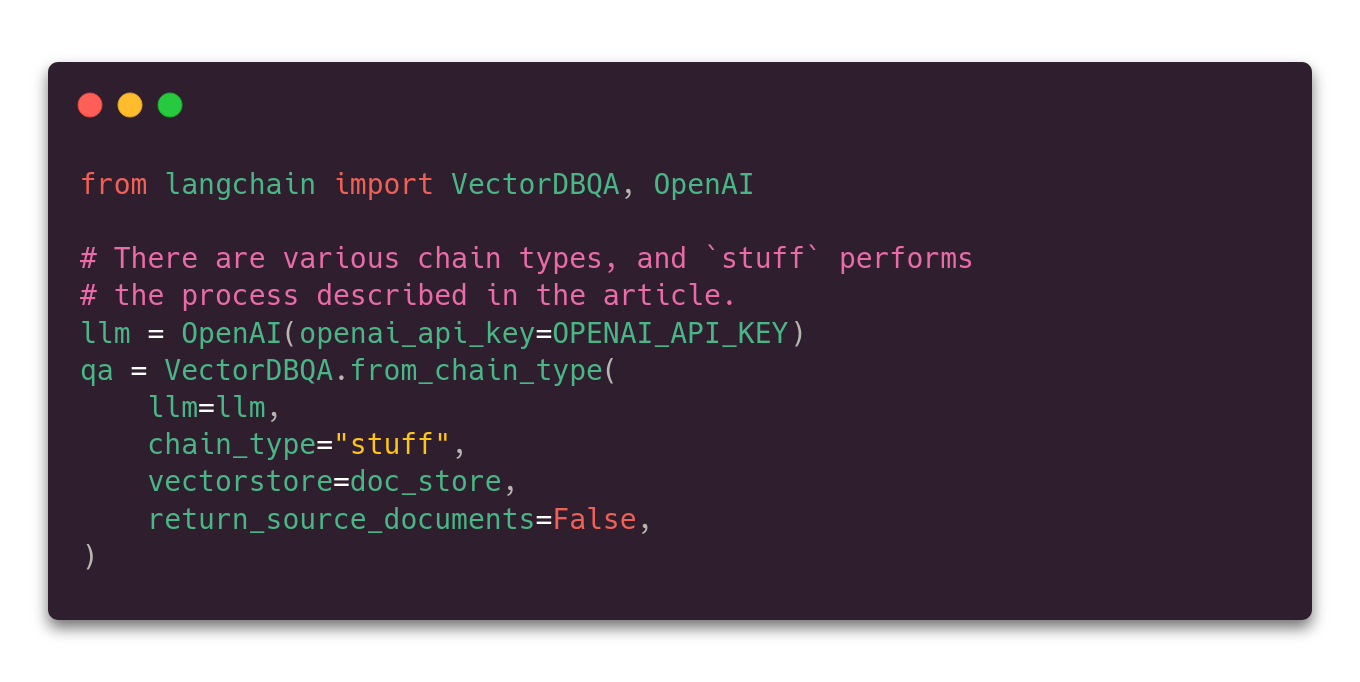

As I built my first five apps with LLMs and incorporated vector databases to enhance contextual relevance, I became increasingly fascinated. The magic lies not in the models themselves but in how we steer them: how we supply context and frame intention so the models can deliver high-value responses and results.
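
Under the hood, a vector database returns the stored texts whose embeddings are closest to the query’s embedding, and those texts are added to the prompt as context. The sketch below shows that retrieval step in plain Python using cosine similarity. The `embed` function is a toy bag-of-words stand-in (an assumption) for a real embedding model, and the in-memory list stands in for the database.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (word counts); a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k stored documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "To request a refund, reply to your order confirmation email.",
]
question = "How do I get a refund?"
context = retrieve(question, docs)
# The retrieved texts become the context section of the prompt.
prompt = "Context:\n" + "\n".join(context) + "\n\nQuestion: " + question
```

The toy embedding misses that "refunds" and "refund" are related; real embedding models capture that kind of similarity, which is what makes the retrieved context relevant.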

This reminds me of a fantasy series I read as a child, The Belgariad. In its world, magic is wielded through the “Will and the Word.” The idea strikes me as symbolically spot on, not just for how humans influence each other, but also for how we influence AI.

One observation stands out across all major implementations today: AI scripts are small. Most of the computation happens inside the LLMs, while the orchestration (the intention and context) is the only thing happening outside. Python, despite its imperfections, dominates this space because it’s one of the closest things we have to natural language in programming.

What I started doing was creating a library of all the different actions that, in some way, handled and communicated intent and context between the users, the app, the vector databases, and the LLMs. It turned out that instead of managing 200+ integrations, I could get it down to just 30 modules, combined into about 15 flows. This was possible by abstracting away the layers that were app- or function-specific and making them part of the data passed between the actions, rather than hard-coding them in the actions themselves. Managing the whole setup became immensely easier, and I could focus on perfecting actions and modules that affected several functions at once.
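
A minimal sketch of that abstraction, with hypothetical names throughout (this is not the Triform API): each action is a generic function that reads what it needs from the data passing through it, and a flow is an ordered list of actions. The app-specific parts (template, context collection, model name) live in the payload, so the same actions serve different apps.

```python
from typing import Any, Callable

Payload = dict[str, Any]
Action = Callable[[Payload], Payload]


def fill_template(p: Payload) -> Payload:
    """Generic action: render whatever template the payload carries."""
    return {**p, "prompt": p["template"].format(**p["inputs"])}


def attach_context(p: Payload) -> Payload:
    """Generic action: look up context in whichever collection the payload names."""
    store = p["stores"][p["collection"]]
    found = store.get(p["inputs"]["topic"], "none")
    return {**p, "prompt": p["prompt"] + "\nContext: " + found}


def call_model(p: Payload) -> Payload:
    """Generic action: hypothetical model call, stubbed so the sketch runs offline."""
    return {**p, "answer": f"[{p['model']}] {p['prompt']}"}


def run_flow(actions: list[Action], payload: Payload) -> Payload:
    """A flow is an ordered list of actions; each one transforms the payload."""
    for action in actions:
        payload = action(payload)
    return payload


qa_flow = [fill_template, attach_context, call_model]
stores = {"faq": {"refunds": "Refunds take 5 days."}, "hr": {"vacation": "25 days per year."}}

# Two different "apps" reuse the same flow; only the data differs.
support = run_flow(qa_flow, {
    "template": "Answer the customer: {question}",
    "inputs": {"question": "Refund time?", "topic": "refunds"},
    "collection": "faq", "stores": stores, "model": "small-model",
})
hr = run_flow(qa_flow, {
    "template": "Answer the employee: {question}",
    "inputs": {"question": "Vacation days?", "topic": "vacation"},
    "collection": "hr", "stores": stores, "model": "large-model",
})
```

Adding a third app here means writing a new payload, not a new integration; improving `attach_context` improves every flow that uses it.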

Introducing Triform: A Platform for Intent and Context

This realization became the foundation for Triform, our platform for building and orchestrating AI agents and integrations. At its core, Triform enables developers to create small, modular building blocks we call “actions.” These actions define the rules, logic, and intent that guide LLMs. We offer over 900 pre-built actions as Python templates that you can select and build on, but you are always in 100% control, and the code is always yours to own and modify.

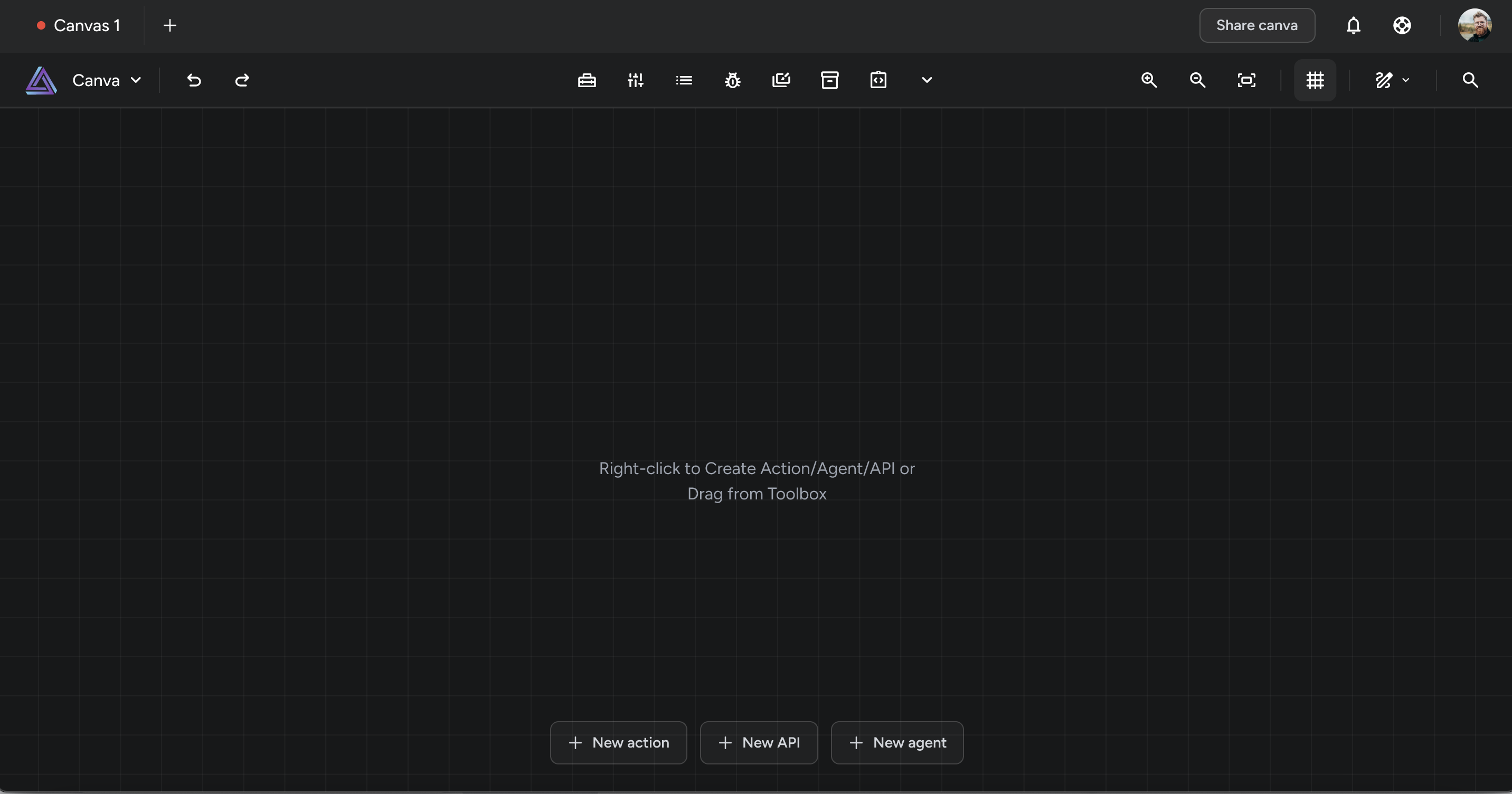

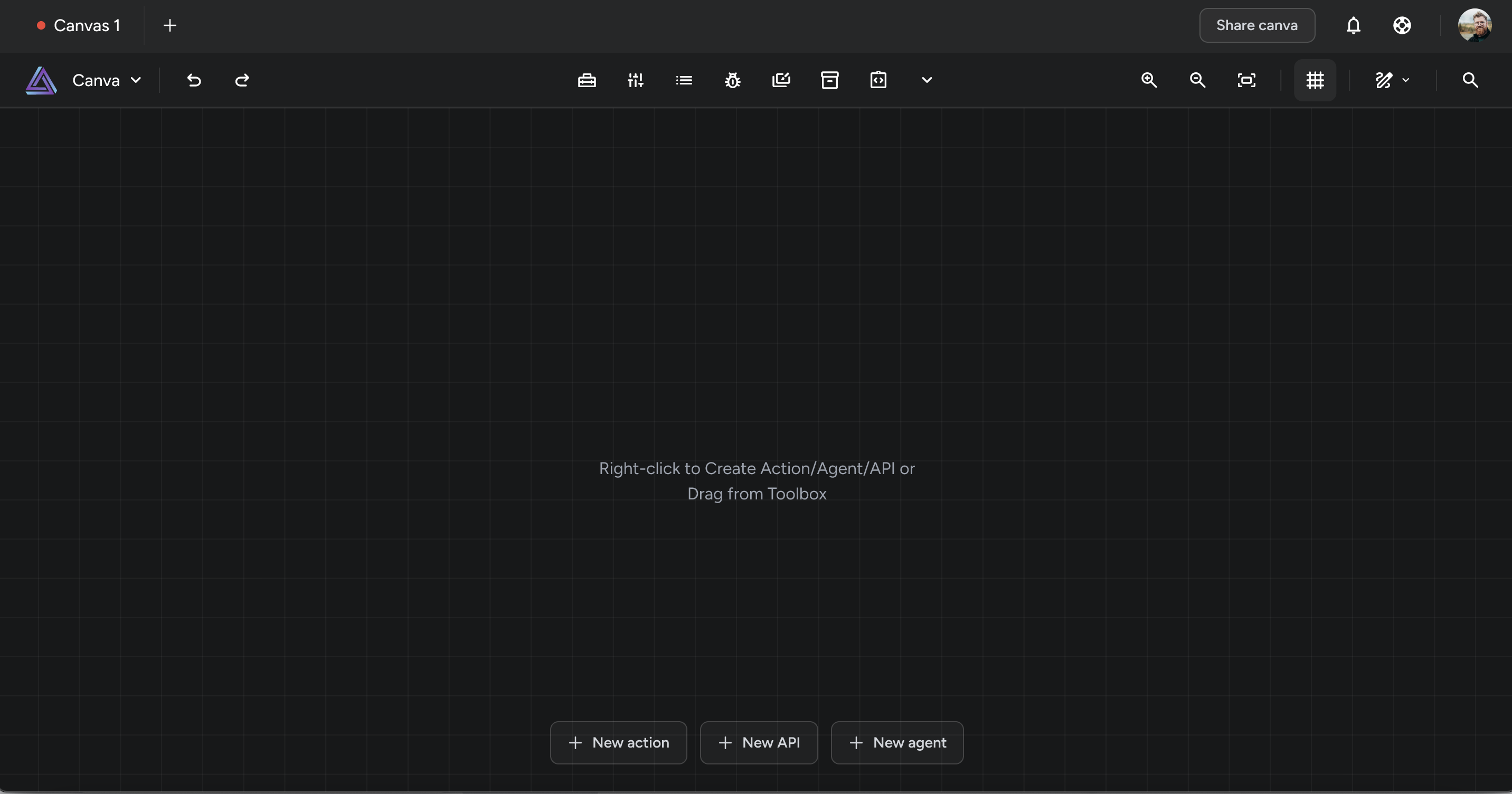

Triform is a blank canvas—an open playground for developers to build, share, and refine their tools, workflows and agents. While we provide templates for common use cases, we never limit creative expression. Our philosophy is simple: remove complexities while empowering developer freedom.

With just a few building blocks, developers can combine actions into agentic flows, or enable them for autonomous agents, creating advanced AI implementations to automate virtually anything. Think of it as assembling a custom Lego set: each piece adds to your toolbox, ready to be reused, repurposed, and specialized for any need.
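
Where a flow runs its actions in a fixed order, an autonomous agent picks its next action at run time. A minimal sketch of that loop, assuming a hypothetical `choose_next` function that stands in for the LLM’s decision (here a simple rule so the example runs offline); the action names and the step limit are illustrative.

```python
from typing import Any, Callable, Optional

State = dict[str, Any]

# Registry of reusable actions the agent may pick from (hypothetical examples).
ACTIONS: dict[str, Callable[[State], State]] = {
    "search": lambda s: {**s, "notes": s["notes"] + [f"found info on {s['goal']}"]},
    "summarize": lambda s: {**s, "summary": "; ".join(s["notes"])},
}


def choose_next(state: State) -> Optional[str]:
    """Stand-in for an LLM deciding the next step; returns None when done."""
    if not state["notes"]:
        return "search"
    if "summary" not in state:
        return "summarize"
    return None


def run_agent(goal: str, max_steps: int = 10) -> State:
    """Let the agent pick actions until it decides it is done or hits the step limit."""
    state: State = {"goal": goal, "notes": [], "trace": []}
    for _ in range(max_steps):
        name = choose_next(state)
        if name is None:
            break
        state = ACTIONS[name](state)
        state["trace"].append(name)  # record which action ran, for inspection
    return state


result = run_agent("vector databases")
```

The same actions could just as well be wired into a fixed flow; only the component that decides the order changes. The step limit keeps a confused decision function from looping forever.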

Our canvas combines coding, testing, building, deploying, monitoring, and iterating in one view. Since programming with and for AI is a completely new experience, we need to rethink the developer experience and the way we build software. The biggest change is that, most of the time, we no longer have a fixed input or output, but a living conversation that can go in many different directions. We are working with probabilistic and heuristic results instead of deterministic software, all complicated by the black-box nature of LLMs. This is why we think it is crucial to bring all these steps closer together and integrate them more tightly than ever, giving direct, real-time feedback and insight into the performance and results of every change. We are doing everything we can to maximize the I/O between you, the conductor, and the tools at your fingertips.
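
Because the same input can produce different outputs, a single run says little about whether a change helped. One common way to get feedback is to run each variant many times over a set of test cases and compare pass rates. A minimal sketch, where `fake_model` is a hypothetical stand-in for an LLM call (a seeded random generator, so results are reproducible) and its success probabilities are made up for illustration.

```python
import random
from typing import Callable


def fake_model(prompt: str, rng: random.Random) -> str:
    """Hypothetical LLM stand-in: answers correctly more often when the prompt
    includes context (the probabilities are invented for this sketch)."""
    p_correct = 0.9 if "Context:" in prompt else 0.6
    return "Paris" if rng.random() < p_correct else "Lyon"


def pass_rate(make_prompt: Callable[[str], str], cases: list[tuple[str, str]],
              runs: int, seed: int = 0) -> float:
    """Run every case `runs` times and return the fraction of correct answers."""
    rng = random.Random(seed)
    passed = total = 0
    for question, expected in cases:
        for _ in range(runs):
            passed += fake_model(make_prompt(question), rng) == expected
            total += 1
    return passed / total


cases = [("What is the capital of France?", "Paris")]
baseline = pass_rate(lambda q: q, cases, runs=200)
with_context = pass_rate(lambda q: "Context: geography quiz.\n" + q, cases, runs=200)
print(f"baseline={baseline:.2f} with_context={with_context:.2f}")
```

Both variants use the same seed, so they see the same random draws and the difference in pass rate reflects the prompt change rather than sampling noise.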

We believe that if this is done right, this is where 100x developers will grow and flourish.

We want to enable the one-man unicorn, and we are sure that the one-man unicorn is a developer.

A Personal Reflection

I’ve never been more excited. Watching this space evolve, contributing to it, and being alive during such transformative times is a privilege. The coming years will be nothing short of extraordinary, and I can’t wait to see where they take us. Understanding and working with AI has given me new insights about us as humans, and maybe even more so about myself as a person. I believe that the journey of discovering AI is equally a journey of self-discovery: gaining a deeper understanding of our own intelligence, context, and intention.

Here’s to building with intention and context, and to shaping a future built with a focus on constructive impact, for all.

Best regards,

Iggy